Compute Express Link™ (CXL™) is a new, high-speed CPU-to-Device and CPU-to-Memory interconnect designed to accelerate next-generation data center performance. The UEFI and ACPI specifications provide standard interfaces for discovering system attributes, so they need to be extended to discover, enumerate and configure CXL devices and CXL-capable systems. Many members of the UEFI Forum are also members of the CXL Consortium, which has helped foster a community focused on improving the UEFI and ACPI specifications to better support CXL-based systems and devices. These enhancements also expand the UEFI and ACPI technology ecosystems to cover CXL systems. In this blog, we provide background on the CXL Consortium and CXL technology, their links to the UEFI and ACPI technologies, and the plans for enriching the UEFI and ACPI specifications.

Compute Express Link

The CXL Consortium was formed in 2019 to develop CXL as an open industry standard interface for high-speed communications. CXL technology is built on the PCI Express® (PCIe®) physical and electrical interface. CXL provides a high-bandwidth, low-latency coherent interconnect and addresses high-performance computational workloads across Artificial Intelligence (AI), Machine Learning (ML), High-Performance Computing (HPC) and many other market segments.

CXL dynamically multiplexes three protocols over the PCIe 5.0 physical layer. The three protocols are CXL.io, CXL.cache and CXL.mem. All three can flow simultaneously, with their packets sent one after another on the same physical bus. CXL.io provides I/O semantics like those of the PCIe specification and is used for enumerating CXL devices. CXL.cache enables accelerators and processors to share the same coherency domain and to manage and cache each other’s memory. The CXL high-speed interconnect can be used to connect CPUs to workload accelerators such as GPUs, Field Programmable Gate Arrays (FPGAs) and other special-purpose accelerator solutions. CXL.mem provides memory semantics that allow memory to be expanded, by attaching a device to CXL, beyond what is possible with traditional DDR DIMMs. All CXL systems are, by definition, heterogeneous computing systems that consist of different accelerators with various classes of memory and performance characteristics coexisting in the same system. Memory coherency between the CPU memory space and memory on attached devices enables resource sharing, which delivers improved performance as well as reduced overall system cost and software stack complexity.

The CXL specification 1.1 has been available to the public since April 2019 and CXL-capable systems are expected to come out soon. This emphasizes the need for standardization across the firmware and software stack, with a focus on several technologies including UEFI, ACPI and PCI Firmware architecture. These technologies provide the necessary interfaces between the firmware and the operating systems to support new use cases of accelerators and memory expansion across different implementations.

UEFI and ACPI Specification Overview

The UEFI specification plays an important role in the CXL ecosystem and presents a consistent view of CXL to shrink-wrap operating systems. The ACPI specification describes such systems to the Operating System (OS) for optimized workload placement or memory location, directly benefitting CXL. Both the UEFI and ACPI specifications are well established and are managed by a standards committee with broad industry participation, which enables engagement in an open source project and allows developers to leverage existing work completed by community members. This community-developed effort encourages contributions and networking among individual UEFI Forum members that choose to adopt CXL.

Proposed Enhancements for UEFI and ACPI Specifications

Members of the CXL Consortium who are also part of the UEFI Forum are contributing by submitting Engineering Change Requests (ECRs) and proposals for enhancing the UEFI and ACPI specifications. These proposed enhancements will allow the OS to discover CXL buses and the devices on them, and to manage them either natively or through System Firmware. The enhancements include new ACPI tables, data structures and UEFI protocols that would work for CXL as well as for other similar coherent interconnects. Collaborating with the UEFI Forum to enable firmware vendors and system software vendors paves the way for standard operating systems to discover and exploit CXL capabilities. UEFI Forum members can view the UEFI and ACPI change request list and Work in Progress specifications for more information.

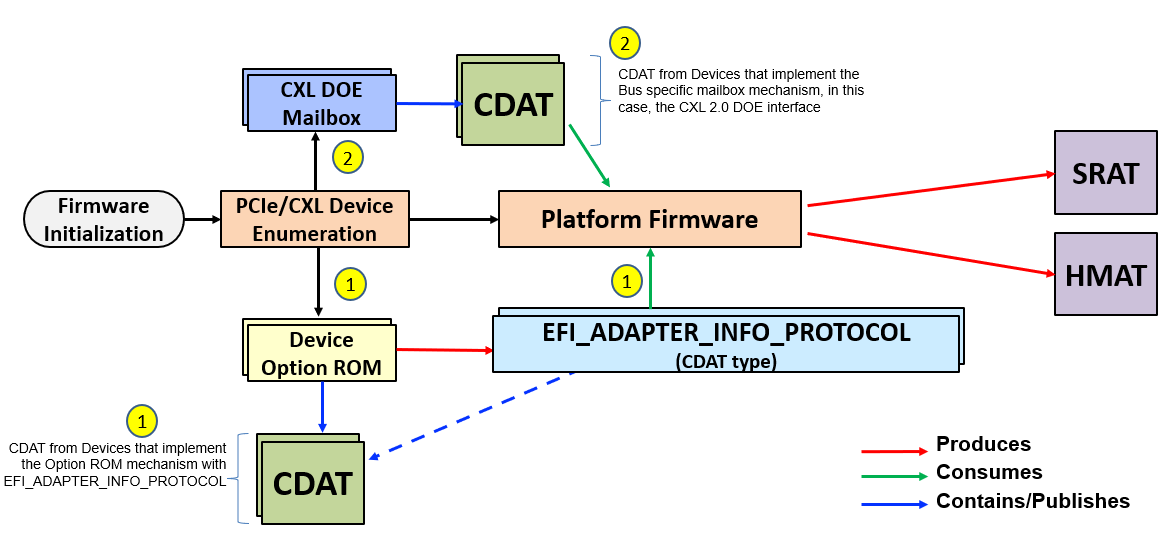

The Coherent Device Attribute Table (CDAT) is a good example of the types of changes CXL brings about. CDAT is a data structure that is exposed by a CXL component and describes the performance characteristics of that component. CDAT structures can be discovered and extracted from devices or switches during boot by the system firmware, as shown in Figure 1. The system firmware can then use the CDAT content to construct the ACPI SRAT and HMAT tables.

Figure 1: Pre-boot CDAT Extraction Method (for CXL devices)
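
To make the CDAT-to-ACPI step more concrete, here is a minimal Python sketch of how system firmware might turn performance data read from each device into SRAT-like and HMAT-like entries. It is an illustration under assumptions, not the layout defined by the CDAT or ACPI specifications: the record types, their field names, the `build_tables` function, the fixed link latency and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CdatMemoryRange:
    """Hypothetical, simplified stand-in for what a device reports via CDAT."""
    size: int                  # bytes of device-attached memory
    read_latency_ns: int       # latency measured at the device
    read_bandwidth_mbps: int   # bandwidth measured at the device


@dataclass(frozen=True)
class SratMemoryEntry:
    """Simplified SRAT-like entry: which proximity domain owns which range."""
    proximity_domain: int
    base: int
    size: int


@dataclass(frozen=True)
class HmatEntry:
    """Simplified HMAT-like entry: performance from initiator to target."""
    initiator_domain: int
    target_domain: int
    read_latency_ns: int
    read_bandwidth_mbps: int


def build_tables(
    cdat_by_device: Dict[str, List[CdatMemoryRange]],
    host_base: int,
    first_domain: int,
    cpu_domain: int,
    link_latency_ns: int,
) -> Tuple[List[SratMemoryEntry], List[HmatEntry]]:
    """Assign each device range a proximity domain and a host address,
    then record CPU-to-device performance for it."""
    srat: List[SratMemoryEntry] = []
    hmat: List[HmatEntry] = []
    next_base, next_domain = host_base, first_domain
    for device in sorted(cdat_by_device):          # deterministic order
        for rng in cdat_by_device[device]:
            srat.append(SratMemoryEntry(next_domain, next_base, rng.size))
            # Assumption: end-to-end latency is device latency plus a fixed
            # link latency; bandwidth is passed through unchanged.
            hmat.append(HmatEntry(
                cpu_domain,
                next_domain,
                rng.read_latency_ns + link_latency_ns,
                rng.read_bandwidth_mbps,
            ))
            next_base += rng.size
            next_domain += 1
    return srat, hmat


GIB = 1 << 30
example_cdat = {
    "cxl0": [CdatMemoryRange(16 * GIB, 150, 32000)],  # made-up values
    "cxl1": [CdatMemoryRange(8 * GIB, 200, 16000)],   # made-up values
}
srat, hmat = build_tables(
    example_cdat, host_base=64 * GIB, first_domain=1,
    cpu_domain=0, link_latency_ns=40,
)
for s, h in zip(srat, hmat):
    print(s.proximity_domain, hex(s.base), s.size // GIB, h.read_latency_ns)
```

The point of the sketch is the division of labor: the device describes only itself, and the firmware combines that description with what it knows about the platform (address map, link characteristics) before presenting a system-wide view to the OS.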

Watch the Recent CXL and UEFI Webinar

If you would like to learn more about this topic, a recent presentation from the UEFI 2020 Virtual Plugfest, “Compute Express Link: Proposed Enhancements to UEFI and ACPI Specifications,” is available to watch anytime on the UEFI Forum YouTube channel or BrightTALK channel.

About the CXL Consortium

For additional information on CXL, check out its Resource Library:

- White paper: Introduction to Compute Express Link

- Webinar: Introduction to Compute Express Link (CXL) Webinar Presentation

- Webinar: Exploring Coherent Memory and Innovative Use Case

Compute Express Link™ and CXL™ Consortium are trademarks of the Compute Express Link Consortium.